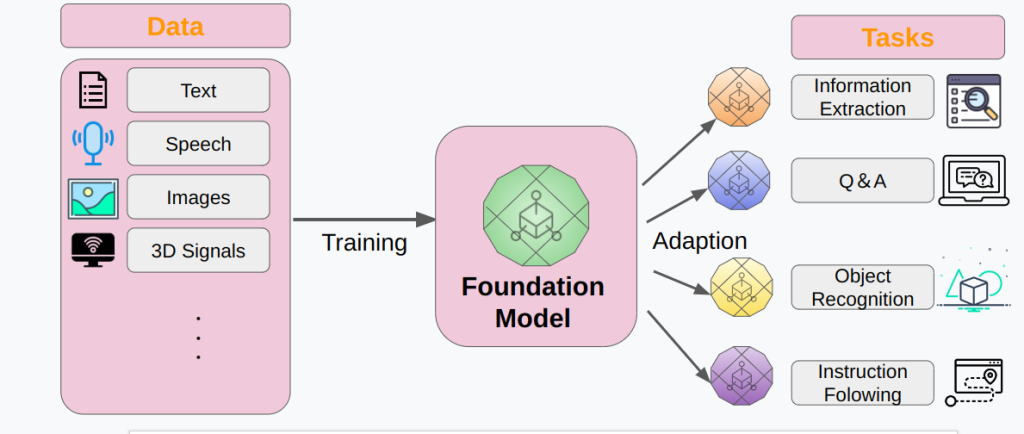

The term “foundation model” refers to an AI/machine learning model that has undergone a two-stage training process: it is first pre-trained on a large amount of unlabeled data and then fine-tuned so that it can be adapted to a wide range of downstream tasks.

A foundation model is an AI/machine learning model trained (pre-trained) on a large amount of data. This training is generally performed with self-supervised learning, which uses data without ground-truth labels. The foundation model is then retrained (mainly by fine-tuning) to adapt to a wide range of downstream tasks. This two-stage training process is distinctive, and a major feature is that a single foundation model can adapt to a variety of tasks (multitasking ability), as shown in the figure above (Figure 1).
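To make "self-supervised learning without correct labels" concrete, here is a minimal sketch of how unlabeled text can be turned into training examples for next-word prediction: the "label" for each example is simply the next word in the text. Whitespace splitting stands in for a real subword tokenizer, and the function name `next_token_pairs` is hypothetical, chosen for illustration only.

```python
# Minimal sketch: turning unlabeled text into (context, target) training pairs.
# Assumption: whitespace tokenization stands in for a real subword tokenizer.

def next_token_pairs(text: str, context_size: int = 3) -> list[tuple[list[str], str]]:
    """Build self-supervised examples: each target is simply the next word in the text."""
    tokens = text.split()
    pairs = []
    for i in range(1, len(tokens)):
        context = tokens[max(0, i - context_size):i]  # up to `context_size` preceding words
        pairs.append((context, tokens[i]))            # the "label" comes from the data itself
    return pairs


if __name__ == "__main__":
    for context, target in next_token_pairs("foundation models learn from unlabeled data"):
        print(context, "->", target)
```

No human annotation is needed: any large text corpus yields millions of such pairs, which is what makes pre-training at scale feasible.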

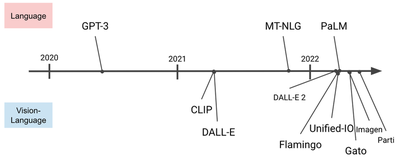

The term “foundation model” was popularized by Stanford University’s Institute for Human-Centered Artificial Intelligence (HAI) in 2021 and came into the spotlight in 2022, especially among AI researchers and engineers. Representative examples include GPT-3 and CLIP (used in Stable Diffusion, for example), both developed by OpenAI. With the arrival of GPT-4 in 2023, foundation models continue to evolve and are being applied in a variety of fields.

Why are foundation models important?

Foundation models have the potential to significantly change the machine learning lifecycle. Developing a foundation model from scratch can currently cost millions of dollars, but the investment pays off in the long run: it is faster and cheaper for data scientists to build new machine learning applications on top of pre-trained foundation models (FMs) than to train their own models from scratch.

One potential use case is the automation of tasks and processes that specifically require reasoning capabilities. Applications of foundation models include:

- Customer Support

- Language Translation

- Content Generation

- Copywriting

- Image Classification

- High-Resolution Image Creation and Editing

- Document Extraction

- Robotics

- Medical Care

- Autonomous Vehicles

How Foundation Models Work

Two characteristics that make foundational models work are transfer learning and scale. Transfer learning refers to the ability of a model to apply information about one situation to another, building on its internal “knowledge”.
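One common way to apply transfer learning is a "linear probe": keep the pre-trained network frozen, reuse its features, and train only a small new output layer for the downstream task. The sketch below assumes a hypothetical frozen feature extractor (a fixed random projection standing in for pre-trained weights) and a synthetic labeled dataset; only the logistic-regression head is trained.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for a pre-trained backbone: a fixed projection whose weights are
# frozen. In practice these weights would come from large-scale pre-training.
W_frozen = rng.normal(size=(4, 16))
W_frozen.setflags(write=False)  # make "frozen" literal: any in-place update would raise

def extract_features(x: np.ndarray) -> np.ndarray:
    """Frozen feature extractor, reused as-is for the new task."""
    return np.tanh(x @ W_frozen)

def train_head(features: np.ndarray, labels: np.ndarray, lr: float = 0.5, steps: int = 2000):
    """Train only a small logistic-regression head on top of the frozen features."""
    w = np.zeros(features.shape[1])
    b = 0.0
    for _ in range(steps):
        probs = 1.0 / (1.0 + np.exp(-(features @ w + b)))
        grad = probs - labels                      # d(binary cross-entropy)/d(logits)
        w -= lr * features.T @ grad / len(labels)
        b -= lr * grad.mean()
    return w, b

# Small labeled dataset for the downstream task (synthetic, for illustration only).
x = rng.normal(size=(200, 4))
y = (x[:, 0] + x[:, 1] > 0).astype(float)

feats = extract_features(x)
w, b = train_head(feats, y)
accuracy = (((feats @ w + b) > 0) == (y == 1)).mean()
print(f"training accuracy of the new head: {accuracy:.2f}")
```

Because only 17 numbers (16 weights and a bias) are learned here, the downstream task needs far less labeled data and compute than training the whole network would.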

Scale refers to hardware, specifically graphics processing units (GPUs), which allow models to perform multiple calculations simultaneously (also known as parallel processing). GPUs are essential for training and deploying deep learning models, including foundational models, because they provide the power to quickly crunch data and perform complex statistical calculations.
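A back-of-the-envelope estimate shows why parallel hardware is indispensable. The sketch below uses the widely cited rule of thumb that training costs roughly 6 FLOPs per parameter per training token (forward plus backward pass). The inputs are assumptions: GPT-3's 175 billion parameters, about 300 billion training tokens, an NVIDIA A100 BF16 dense peak of 312 TFLOP/s, and 40% sustained utilization.

```python
# Back-of-the-envelope training-compute estimate using the rule of thumb
# C ≈ 6 · N · D floating-point operations (N = parameters, D = training tokens).
# All numbers below are assumptions for illustration.

def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate total training FLOPs: ~6 FLOPs per parameter per token."""
    return 6.0 * n_params * n_tokens

def gpu_days(total_flops: float, peak_flops_per_gpu: float, utilization: float) -> float:
    """Convert total FLOPs into GPU-days at a given fraction of peak throughput."""
    seconds = total_flops / (peak_flops_per_gpu * utilization)
    return seconds / 86_400

flops = training_flops(175e9, 300e9)               # GPT-3-scale model, assumed token count
days = gpu_days(flops, peak_flops_per_gpu=312e12,  # assumed A100 BF16 dense peak
                utilization=0.4)                   # assumed 40% hardware utilization
print(f"{flops:.2e} FLOPs, about {days:,.0f} GPU-days, "
      f"or about {days / 1000:.0f} days on 1,000 GPUs")
```

Under these assumptions a single GPU would need decades, while a cluster of a thousand GPUs working in parallel brings the job down to roughly a month.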

Deep Learning and Foundation Models

Many foundation models, especially those used in natural language processing (NLP), computer vision, and audio processing, are pre-trained using deep learning techniques. Deep learning is the technology behind many (but not all) foundation models and has been the driving force behind many advances in the field. Deep learning (also called deep neural learning) trains artificial neural networks with many layers to learn patterns directly from examples, loosely analogous to the way humans learn from observation.

Transformers and Foundation Models

While not all foundation models use Transformers, the Transformer architecture has proven to be a popular way to build foundation models that involve text, such as ChatGPT, BERT, and DALL-E 2. Transformers enhance the capabilities of ML models by allowing them to capture contextual relationships and dependencies between elements in a sequence of data. Transformers are a type of artificial neural network (ANN) originally developed for NLP, but they are now also widely used in computer vision (for example, the Vision Transformer) and speech processing (for example, OpenAI’s Whisper).
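The mechanism that lets a Transformer "capture contextual relationships and dependencies" is self-attention: every position in a sequence computes a weighted average over all positions, with weights derived from query-key similarity. Below is a minimal single-head sketch of scaled dot-product self-attention in NumPy. The projection matrices are random here (an assumption standing in for learned weights), and multi-head attention, masking, and positional encodings are omitted.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)   # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over x of shape (seq_len, d_model)."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])    # similarity of every position to every other
    weights = softmax(scores, axis=-1)         # each row is a distribution summing to 1
    return weights @ v, weights                # context-aware mix of value vectors

rng = np.random.default_rng(0)
seq_len, d_model, d_head = 4, 8, 8
x = rng.normal(size=(seq_len, d_model))        # e.g. embeddings of a 4-token sequence
w_q, w_k, w_v = (rng.normal(size=(d_model, d_head)) for _ in range(3))
out, weights = self_attention(x, w_q, w_k, w_v)
print(out.shape)
print(weights.round(2))
```

Each row of `weights` shows how much one token "looks at" every other token, which is how context from anywhere in the sequence flows into each output vector.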

Use cases for foundation models

Once a foundation model is trained, it can harness the knowledge it gains from a vast pool of data to help solve problems. This skill can provide valuable insights and contribute to your organization in a variety of ways. Some common tasks that foundation models can perform include:

Natural Language Processing (NLP)

Foundation models trained on large amounts of text learn to recognize context, grammar, and linguistic structure, which lets them generate text and extract information from it. Further fine-tuning these NLP models to associate text with sentiment (positive, negative, or neutral) can be useful for businesses looking to analyze written messages such as customer feedback, online reviews, and social media posts. The field of NLP is broad and includes the development and application of large language models (LLMs).

Computer Vision

When a computer vision model can recognize basic shapes and features, it can begin to identify patterns. With further fine-tuning, computer vision models can enable automated content moderation, facial recognition, and image classification. The models can also generate new images based on the patterns they have learned.

Audio/Speech Processing

When audio/speech processing models can recognize speech elements, they can derive meaning from the human voice, enabling more efficient and inclusive communication. Features such as virtual assistants, multi-language support, voice command, and transcription improve accessibility and productivity.

With further fine-tuning, machine learning systems can be designed to be more specialized to address industry-specific needs, such as fraud detection for financial institutions, gene sequencing for healthcare, or chatbots for customer service.

Example of a foundation model

I would like to briefly explain what kinds of foundation models are available.

Below, we describe representative foundation models from GPT-3 onward and the input-output relationships of these models.

(Figure: Examples of foundation models)

As shown above, there are various types of foundation models, and you can choose the one that best suits your purpose.

*In fact, we at TRAIL also use a foundational model called CLIP (Contrastive Language-Image Pretraining). CLIP learns from a large number of image-description pairs and performs zero-shot image classification.
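CLIP's zero-shot classification works by comparing embeddings: the image and a text prompt for each candidate label (for example, "a photo of a cat") are encoded into the same vector space, and the label whose text embedding has the highest cosine similarity to the image embedding wins. The sketch below assumes hand-made 3-dimensional vectors in place of real CLIP encoder outputs, and a temperature of 100 as an assumed logit scale; it illustrates the scoring step only.

```python
import numpy as np

def zero_shot_classify(image_emb, text_embs, labels, temperature=100.0):
    """CLIP-style zero-shot classification: pick the label whose text embedding is most similar."""
    img = image_emb / np.linalg.norm(image_emb)                        # unit-normalize image
    txt = text_embs / np.linalg.norm(text_embs, axis=1, keepdims=True) # unit-normalize prompts
    logits = temperature * (txt @ img)                                 # scaled cosine similarities
    probs = np.exp(logits - logits.max())                              # stable softmax
    probs /= probs.sum()
    return labels[int(np.argmax(probs))], probs

# Hypothetical embeddings standing in for encoder outputs of prompts like "a photo of a cat".
labels = ["cat", "dog", "car"]
text_embs = np.array([[0.9, 0.1, 0.0],
                      [0.7, 0.6, 0.1],
                      [0.0, 0.2, 0.9]])
image_emb = np.array([0.8, 0.2, 0.1])   # hypothetical image embedding

label, probs = zero_shot_classify(image_emb, text_embs, labels)
print(label, probs.round(4))
```

No classifier is trained for these labels: adding a new class only requires writing a new text prompt, which is what makes the approach "zero-shot".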

What are some more examples of foundational models?

The number and size of foundation models on the market are growing rapidly. There are currently dozens of different models available. Below is a list of notable foundation models released since 2018:

BERT

Bidirectional Encoder Representations from Transformers (BERT), released in 2018, was one of the early foundation models. BERT is a bidirectional model that analyzes the context of an entire sequence to make predictions. It was trained on BooksCorpus and English Wikipedia, about 3.3 billion words in total, and its larger version has 340 million parameters. BERT can be fine-tuned to answer questions, predict whether one sentence follows another, and classify text.
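BERT's bidirectionality comes from its masked language modeling objective: some input tokens are hidden, and the model must predict them using context on both sides. The sketch below follows the masking recipe described in the BERT paper (select about 15% of positions; replace 80% of those with `[MASK]`, 10% with a random token, and keep 10% unchanged). Word-level tokens and the function name `mask_tokens` are simplifying assumptions for illustration.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """BERT-style masking: choose ~mask_prob of positions as prediction targets; of those,
    replace 80% with [MASK], 10% with a random vocabulary token, and leave 10% unchanged."""
    rng = random.Random(seed)
    inputs, targets = list(tokens), [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            targets[i] = tok                 # the model must recover the original token here
            r = rng.random()
            if r < 0.8:
                inputs[i] = MASK
            elif r < 0.9:
                inputs[i] = rng.choice(vocab)
            # else: keep the original token so the model cannot rely on seeing [MASK]
    return inputs, targets

tokens = "the model reads the whole sentence in both directions".split()
vocab = sorted(set(tokens))
inputs, targets = mask_tokens(tokens, vocab, mask_prob=0.3)
print(inputs)
print(targets)
```

Positions whose target is `None` contribute no loss; the model is scored only on the selected positions, and it can use words to the left and right of each one.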

GPT

The Generative Pre-trained Transformer (GPT) model was developed by OpenAI in 2018. It uses a 12-layer Transformer decoder with a masked self-attention mechanism and was trained on the BookCorpus dataset, which contains over 11,000 free novels. A notable feature of GPT-1 is that it showed some zero-shot learning ability.

GPT-2 was released by OpenAI in 2019 with 1.5 billion parameters (GPT-1 had 117 million). GPT-3 uses a 96-layer neural network with 175 billion parameters and was trained on a dataset of roughly 500 billion tokens drawn mostly from filtered Common Crawl data. The popular chatbot ChatGPT is based on GPT-3.5. The latest version, GPT-4, was released in March 2023 and passed the Uniform Bar Exam with a score of 297 (76%).
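GPT models are decoder-only Transformers that generate text autoregressively: they predict one token, append it to the input, and repeat. The sketch below shows only that generation loop with greedy decoding (always taking the most probable next token). A real GPT conditions on the whole prefix through masked self-attention; here a hypothetical bigram probability table, `BIGRAM_PROBS`, stands in for the model so the loop is easy to follow.

```python
# Hypothetical toy "language model": next-token probabilities given only the previous token.
BIGRAM_PROBS = {
    "<s>": {"foundation": 0.6, "the": 0.4},
    "foundation": {"models": 0.9, "<eos>": 0.1},
    "models": {"generate": 0.7, "<eos>": 0.3},
    "generate": {"text": 0.8, "<eos>": 0.2},
    "text": {"<eos>": 1.0},
    "the": {"models": 1.0},
}

def greedy_generate(start: str = "<s>", max_new_tokens: int = 10) -> list[str]:
    """Autoregressive decoding: pick the most likely next token, feed it back in, repeat."""
    output = []
    current = start
    for _ in range(max_new_tokens):
        next_probs = BIGRAM_PROBS[current]
        current = max(next_probs, key=next_probs.get)  # greedy choice
        if current == "<eos>":                          # stop at end-of-sequence
            break
        output.append(current)
    return output

print(" ".join(greedy_generate()))
```

Greedy decoding is only one strategy; chat systems commonly sample from the predicted distribution instead (for example with a temperature setting) to produce more varied text.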

AI21 Jurassic

Jurassic-1, released in 2021, is a 76-layer autoregressive language model with 178 billion parameters. Jurassic-1 generates human-like text and solves complex tasks, with performance comparable to GPT-3.

In March 2023, AI21 Labs released Jurassic-2, which showed improved instruction following and language skills.

Claude

Claude 2 is Anthropic’s cutting-edge model for thoughtful dialogue, content creation, complex reasoning, creativity, and coding, and it is built with Constitutional AI. Claude 2 accepts up to 100,000 tokens in each prompt, which means it can handle hundreds of pages of text or even entire books. Compared with previous versions, Claude 2 can also write longer documents, such as memos or stories, several thousand tokens long.

Cohere

Cohere offers two LLMs: a generation model with capabilities similar to GPT-3 and a representation model aimed at understanding language. Cohere’s model has only 52 billion parameters, yet it is reported to outperform GPT-3 in many respects.

Stable Diffusion

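Stable Diffusion is a text-to-image model that can generate lifelike, high-definition images. It was released in 2022 and employs a diffusion model, which is trained by gradually adding noise to images and learning to remove that noise step by step (denoising); at generation time, it starts from pure noise and denoises it into an image.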

The model is smaller than competing diffusion technologies such as DALL-E 2 and does not require large computing infrastructure. Stable Diffusion runs on ordinary consumer graphics cards and even on smartphones powered by Qualcomm’s Snapdragon 8 Gen 2 platform.
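The "noise" half of a diffusion model can be written in closed form: at step t, a clean sample x0 becomes x_t = sqrt(ᾱ_t)·x0 + sqrt(1 − ᾱ_t)·ε, where ε is Gaussian noise and ᾱ_t is the cumulative product of (1 − β) over a noise schedule. The denoising network is trained to predict ε from x_t and t. This sketch assumes the linear β schedule from the original DDPM paper; Stable Diffusion applies the same idea to compressed image latents, and its exact schedule differs. The latent shape below is hypothetical.

```python
import numpy as np

def linear_beta_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule as in the original DDPM paper (an assumption here)."""
    return np.linspace(beta_start, beta_end, num_steps)

def add_noise(x0, t, alpha_bar, rng):
    """Forward diffusion: sample x_t ~ q(x_t | x_0) in closed form."""
    eps = rng.normal(size=x0.shape)
    x_t = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps
    return x_t, eps   # a denoising network is trained to predict eps from (x_t, t)

betas = linear_beta_schedule()
alpha_bar = np.cumprod(1.0 - betas)   # fraction of the original signal variance kept at step t

rng = np.random.default_rng(0)
x0 = rng.normal(size=(4, 8, 8))       # stand-in for an image latent (hypothetical shape)
for t in (0, 250, 999):
    x_t, eps = add_noise(x0, t, alpha_bar, rng)
    print(f"step {t:4d}: signal scale {np.sqrt(alpha_bar[t]):.4f}")
```

By the last step almost no signal remains, so generation can start from pure noise and run the learned denoiser backward through the steps.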


BLOOM

BLOOM is a multilingual model with an architecture similar to GPT-3. It was developed in 2022 through a collaboration of over 1,000 scientists and the Hugging Face team. The model has 176 billion parameters and took three and a half months to train on 384 NVIDIA A100 GPUs. The BLOOM checkpoint requires 330 GB of storage but can run on a standalone PC with 16 GB of RAM. BLOOM can generate text in 46 languages and code in 13 programming languages.
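The 330 GB checkpoint size follows from simple arithmetic: storage for the weights is roughly the parameter count times the bytes used per parameter. The sketch below assumes weights stored in 16-bit precision (2 bytes each) and ignores optimizer state and file-format overhead. It also shows that holding all weights in memory at once would need far more than 16 GB, so running on such a PC implies loading weights from disk piece by piece.

```python
def checkpoint_size_gb(n_params: float, bytes_per_param: int) -> float:
    """Weights-only storage: parameters × bytes per parameter, in gigabytes (10**9 bytes)."""
    return n_params * bytes_per_param / 1e9

n_params = 176e9  # BLOOM's parameter count
for name, nbytes in [("float32", 4), ("bfloat16", 2), ("int8", 1)]:
    size_gb = checkpoint_size_gb(n_params, nbytes)
    size_gib = size_gb * 1e9 / 2**30   # binary gibibytes, as many tools report sizes
    print(f"{name:>8}: {size_gb:,.0f} GB ({size_gib:,.0f} GiB)")
```

At 2 bytes per parameter this gives about 352 GB, or roughly 328 GiB, which matches the 330 GB figure; 8-bit quantization would halve it again.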

Hugging Face

Hugging Face is a platform that provides open-source tools for building and deploying machine learning models. It serves as a community hub where developers can share and explore models and datasets. Individual membership is free, but paid subscriptions offer higher levels of access. It provides public access to approximately 200,000 models and 30,000 datasets.

Conclusion

We have introduced an overview of foundational models, their importance, how they work, and some common examples of them.

❤️ If you liked the article, like and subscribe to my channel, “Securnerd”.
